Linear Models

There are a few linear models out there that we have found generally useful. This document highlights some of them.

Least Absolute Deviation Regression

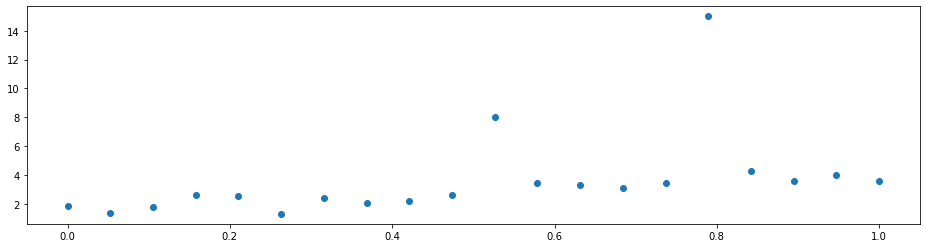

Imagine that you have a dataset with some outliers.

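```python
import numpy as np
import matplotlib.pyplot as plt

# Noisy points around the line y = 3x + 1
np.random.seed(0)
X = np.linspace(0, 1, 20)
y = 3*X + 1 + 0.5*np.random.randn(20)
X = X.reshape(-1, 1)  # scikit-learn expects a 2D feature matrix

# Inject two outliers
y[10] = 8
y[15] = 15

plt.figure(figsize=(16, 4))
plt.scatter(X, y)
```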


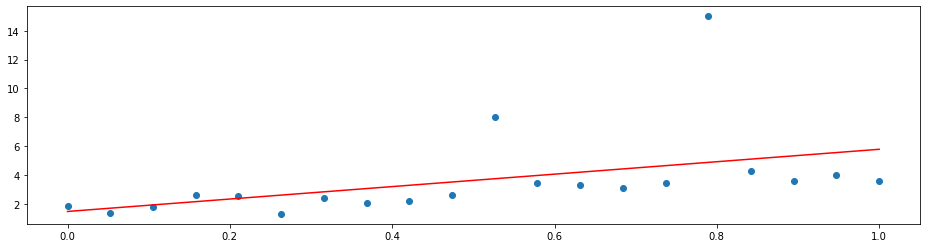

A simple linear regression will not do a good job here, since it is distracted by the outliers. That is because it optimizes the mean squared error

$$\text{MSE} = \frac{1}{n}\sum_{i=1}^{n} \left(y_i - \text{model}(x_i)\right)^2,$$

which penalizes a few large errors more than many small ones. For example, a single observation with $y - \text{model}(x) = 4$ contributes $4^2 = 16$ to the sum of squared errors. Two observations with $y_1 - \text{model}(x_1) = 2$ and $y_2 - \text{model}(x_2) = 2$ contribute only $2^2 + 2^2 = 8$ in total, even though the sum of the errors is 4 in both cases.
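The arithmetic above can be checked in a few lines of NumPy. The residual values are the illustrative ones from the example, not taken from the dataset.

```python
import numpy as np

# Residuals y - model(x) from the example: one large error vs. two small ones
one_large = np.array([4.0])
two_small = np.array([2.0, 2.0])

# Squared errors punish the single large residual twice as hard ...
sq_large = np.sum(one_large ** 2)
sq_small = np.sum(two_small ** 2)

# ... while the absolute errors are identical in both cases
abs_large = np.sum(np.abs(one_large))
abs_small = np.sum(np.abs(two_small))

print(sq_large, sq_small, abs_large, abs_small)  # 16.0 8.0 4.0 4.0
```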

Consequently, an ordinary least-squares fit is pulled toward the outliers:

```python
from sklearn.linear_model import LinearRegression

# Two x-values are enough to draw a straight line over [0, 1]
x = np.array([0, 1]).reshape(-1, 1)

plt.figure(figsize=(16, 4))
plt.scatter(X, y)
plt.plot(x, LinearRegression().fit(X, y).predict(x), 'r')  # least squares fit in red
```


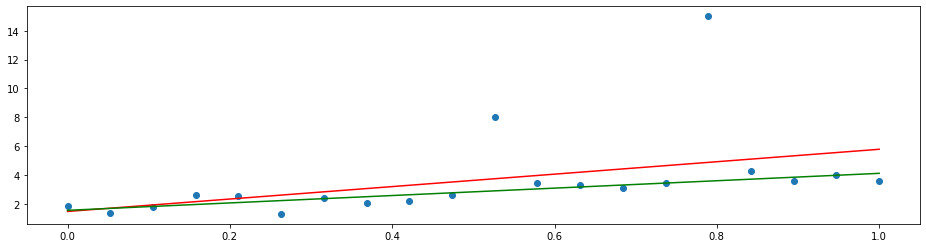

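By changing the loss function to the mean absolute deviation

$$\frac{1}{n}\sum_{i=1}^{n} \left|y_i - \text{model}(x_i)\right|,$$

we can let the model put the same focus on each error: every error is penalized in proportion to its size, so one error of 4 costs exactly as much as two errors of 2. This yields the least absolute deviation (LAD) regression, which tries to agree with the majority of the points.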
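To see what minimizing the absolute deviation means in practice, the sketch below solves the LAD problem as a linear program with SciPy's linprog, introducing one auxiliary variable per observation that bounds its absolute residual. This is one standard formulation chosen for illustration, not necessarily how skbonus implements LADRegression, and the helper name lad_fit is hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def lad_fit(X, y):
    """Hypothetical helper: least absolute deviation fit as a linear program.

    Returns (intercept, coef) minimizing sum_i |y_i - intercept - X_i @ coef|.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    Xa = np.hstack([np.ones((n, 1)), X])  # column of ones for the intercept

    # Variables: [beta (p + 1 entries), u (n entries)]; objective: sum(u)
    c = np.concatenate([np.zeros(p + 1), np.ones(n)])

    # u_i >= |y_i - Xa_i @ beta| expressed as two linear inequalities:
    #    Xa_i @ beta - u_i <=  y_i
    #   -Xa_i @ beta - u_i <= -y_i
    eye = np.eye(n)
    A_ub = np.vstack([np.hstack([Xa, -eye]), np.hstack([-Xa, -eye])])
    b_ub = np.concatenate([y, -y])

    # beta may take any sign; the auxiliary u_i are non-negative
    bounds = [(None, None)] * (p + 1) + [(0, None)] * n

    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    beta = res.x[: p + 1]
    return beta[0], beta[1:]


# Ten points exactly on y = 2x + 1, one of them replaced by a gross outlier
X_demo = np.arange(10, dtype=float).reshape(-1, 1)
y_demo = 2 * X_demo.ravel() + 1
y_demo[5] = 100

intercept, coef = lad_fit(X_demo, y_demo)
ols_slope, ols_intercept = np.polyfit(X_demo.ravel(), y_demo, 1)

print("LAD:", intercept, coef)           # intercept 1 and slope 2, up to solver precision
print("OLS:", ols_intercept, ols_slope)  # slope pulled above 2 by the outlier
```

Because the outlier only enters the LAD objective through its absolute residual, moving it even further away leaves the LAD solution unchanged here, whereas the least-squares slope keeps drifting.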

```python
from sklearn.linear_model import LinearRegression
from skbonus.linear_model import LADRegression

x = np.array([0, 1]).reshape(-1, 1)

plt.figure(figsize=(16, 4))
plt.scatter(X, y)
plt.plot(x, LinearRegression().fit(X, y).predict(x), 'r')  # least squares (red)
plt.plot(x, LADRegression().fit(X, y).predict(x), 'g')  # least absolute deviation (green)
```


See also

scikit-learn tackles this problem by offering a variety of robust regressors. Many of them use an indirect approach to reduce the effect of outliers. RANSAC, for example, repeatedly fits the model on random subsets of the data, keeps the fit that the largest number of points agree with, and refits it on those inliers only. The closest thing to LADRegression that scikit-learn offers is the HuberRegressor, whose loss is squared for small errors and absolute (linear) for large ones. However, it is more complicated and requires tuning the threshold epsilon between the two regimes to unleash its full potential.
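To make the comparison concrete, the sketch below writes out the Huber function in the form given in the scikit-learn documentation (squared below epsilon, linear beyond) and fits LinearRegression, HuberRegressor and RANSACRegressor on data resembling the example above. The noise level, seed and default hyperparameters are arbitrary choices for illustration, and the helper name huber is hypothetical.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression, RANSACRegressor


def huber(z, epsilon=1.35):
    """Hypothetical helper: quadratic for |z| < epsilon, linear beyond (continuous at the switch)."""
    z = np.abs(z)
    return np.where(z < epsilon, z ** 2, 2 * epsilon * z - epsilon ** 2)


# Small residuals are squared, large ones only grow linearly
print(huber(np.array([0.5, 1.0, 4.0])))  # approximately [0.25, 1.0, 8.9775]

# Data resembling the example: points around y = 3x + 1 with two outliers
rng = np.random.default_rng(0)
X_demo = np.linspace(0, 1, 20).reshape(-1, 1)
y_demo = 3 * X_demo.ravel() + 1 + 0.1 * rng.standard_normal(20)
y_demo[10] = 8
y_demo[15] = 15

ols = LinearRegression().fit(X_demo, y_demo)
huber_reg = HuberRegressor(epsilon=1.35).fit(X_demo, y_demo)
ransac = RANSACRegressor(random_state=0).fit(X_demo, y_demo)

# Least squares is pulled toward the outliers; the robust fits stay near a slope of 3
print("OLS slope:   ", ols.coef_[0])
print("Huber slope: ", huber_reg.coef_[0])
print("RANSAC slope:", ransac.estimator_.coef_[0])  # final model refit on the inliers
```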
